All published articles of this journal are available on ScienceDirect.

Is High Fidelity Simulation the Most Effective Method for the Development of Non-Technical Skills in Nursing? A Review of the Current Evidence

Abstract

Aim:

To review the literature on the use of simulation in the development of non-technical skills in nursing.

Background:

The potential risks to patients associated with learning 'at the bedside' are becoming increasingly unacceptable, and the search for innovative education and training methods that do not expose the patient to preventable errors continues. All the evidence shows that a significant proportion of adverse events in health care is caused by problems relating to the application of the 'non-technical' skills of communication, teamwork, leadership and decision-making.

Results:

Simulation is positively associated with significantly improved interpersonal communication skills at patient handover, and it has also been clearly shown to improve team behaviours in a wide variety of clinical contexts and clinical personnel, associated with improved team performance in the management of crisis situations. It also enables the effective development of transferable, transformational leadership skills, and has also been demonstrated to improve students' critical thinking and clinical reasoning in complex care situations, and to aid in the development of students' self-efficacy and confidence in their own clinical abilities.

Conclusion:

High fidelity simulation is able to provide participants with a learning environment in which to develop non-technical skills, that is safe and controlled so that the participants are able to make mistakes, correct those mistakes in real time and learn from them, without fear of compromising patient safety. Participants in simulation are also able to rehearse the clinical management of rare, complex or crisis situations in a valid representation of clinical practice, before practising on patients.

BACKGROUND

The increasing use of technology in healthcare, and higher public and patient expectations have both encouraged the development and use of innovative educational methods in healthcare education. The UK Government paper A High Quality Workforce - the Next Stage Review [1] recommended the development of a “strategy for the appropriate use of e-learning, simulation, clinical skills facilities and other innovative approaches to healthcare education” (p.42). The use of simulation has also been encouraged in a number of key Government documents worldwide, such as the US Institute of Medicine’s report To Err is Human: Building a Safer Health System [2].

More recently, the UK Government White Paper A Framework for Technology Enhanced Learning [3] argued that innovative educational technologies, such as simulation, provide unprecedented opportunities for health and social care students, trainees and staff to acquire, develop and maintain the essential knowledge, skills, values and behaviours needed for safe and effective patient care. Simulation is seen as an effective educational strategy that may be used to address the growing ethical issues around 'practicing' on human patients [4] and may therefore provide an effective way to increase patient safety, decrease the incidence of error and improve clinical judgment [5].

The potential risks to patients associated with learning 'at the bedside' are becoming increasingly unacceptable, and the search for education and training methods that do not expose the patient to preventable errors from inexperienced practitioners continues [5]. As Hobgood et al. [6] note, all the evidence shows that a significant proportion of adverse events in health care are caused by problems relating to the application of the 'non-technical' skills of communication, teamwork, leadership and decision-making. These are the cognitive and social skills that complement technical skills to achieve safe and efficient practice. Non-technical skills are seen as distinct from psychomotor skills [7], since they involve interactions between team members (e.g. communication, teamwork and leadership) or thinking skills such as the ability to read and understand situations or to make decisions, all of which assist with task execution. The accurate and consistent measurement of non-technical skills has been the subject of much debate in recent times [8, 9].

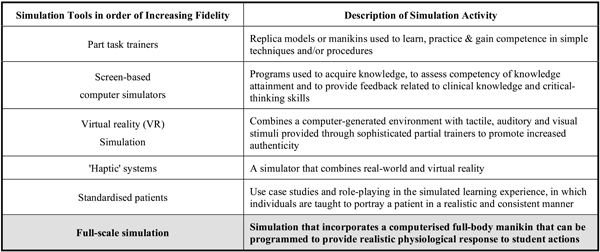

A TYPOLOGY OF SIMULATION ACTIVITY

Fidelity in simulation has traditionally been defined as 'the degree to which the simulator replicates reality' [10]. Using this definition, simulators are labelled as either 'low' or 'high' fidelity depending on how closely they represent 'real life'. In order to simplify the definition of high fidelity simulation, the authors used a typology (Fig. 1) based upon that proposed by Cant & Cooper [11], which defined high fidelity simulation in terms of 'simulation that incorporates a computerised full-body manikin that can be programmed to provide realistic physiological response to student actions'.

Fig. 1. A typology of fidelity within simulation-based education adapted from Cant & Cooper [11].

AIMS

Firstly, to review the body of evidence regarding the use of high fidelity simulation (HFS) in the development of non-technical skills for nurses, and secondly to identify the implications of the findings for nurse education and for future research in simulation.

SEARCH METHODS

A systematic search of the literature was undertaken for all articles written in English between January 2000 and January 2011. The databases used were Web of Science, EBSCOhost (CINAHL Plus, ERIC, Embase, Medline), Cochrane Library, SCOPUS, ScienceDirect, ProQuest and ProQuest Dissertation and Theses Database. Reference lists from relevant papers and the websites and databases of other simulation organisations (e.g. Society in Europe for Simulation Applied to Medicine) were also searched.

Search Terms

Nurs*, midw*, pract*, simulat*, high fidelity, critical thinking, decision making, situation awareness, team*, manikin, educat*, teach*, facilit*, communicat*, human factors, leader*, cognit*
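The trailing asterisk in several of these terms is a truncation operator, so that a single stem retrieves its word variants. The following is a minimal sketch of that behaviour only, assuming simple "stem plus any word characters" semantics; individual databases implement truncation in their own ways, and the function names and the sample sentence are hypothetical illustrations rather than part of the search actually performed.

```python
import re


def truncation_to_regex(term: str) -> re.Pattern:
    """Convert a search term into a case-insensitive regex.

    A trailing '*' is treated as truncation (stem followed by any
    word characters); otherwise the term must match as a whole word.
    This is an assumed, simplified semantics for illustration.
    """
    if term.endswith("*"):
        stem = re.escape(term[:-1])
        pattern = rf"\b{stem}\w*"
    else:
        pattern = rf"\b{re.escape(term)}\b"
    return re.compile(pattern, re.IGNORECASE)


def matches(term: str, text: str) -> list[str]:
    """Return every word in `text` that the search term would retrieve."""
    return truncation_to_regex(term).findall(text)


# Hypothetical sentence, used only to show which variants each term picks up.
sample = (
    "Nurses and midwives practise teamwork in simulated scenarios "
    "led by nursing educators."
)

for term in ["Nurs*", "midw*", "simulat*", "team*", "manikin"]:
    print(term, "->", matches(term, sample))
```

Under these assumptions, `Nurs*` retrieves both "Nurses" and "nursing", whereas an untruncated term such as `manikin` matches only the exact word.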

Quality Appraisal of the Studies

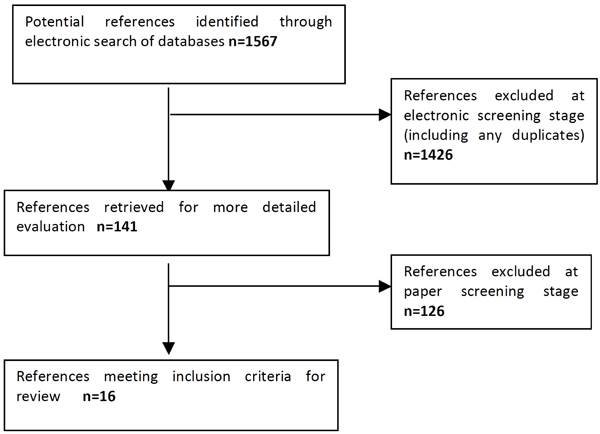

All three of the authors (RL/AS/MMS) were involved in the decision-making process (Fig. 2), and articles were included that were written in English between 2000 and 2011 and involved either nurses or midwives using simulation as an educational strategy. In addition, the methodological approach had to be able to contribute to the body of evidence relating to simulation. It quickly became apparent from this, and from other narrative reviews of the nursing simulation literature [11], that the traditional 'scientific' criteria used to assess the robustness of the studies did not necessarily apply.

Fig. 2. Flow chart for the study selection process.

In practical terms, the team agreed to exclude all qualitative and descriptive papers, and took a pragmatic decision to review the quantitative pre- and post-test studies, quasi-experimental and single-test studies available, even though the robustness of their design may be open to question. The papers were reviewed according to the quality criteria suggested for assessing randomised controlled trials and case control trials by the Critical Appraisal Skills Programme (CASP) [12]. The designs of the studies were noted, as was whether the description of each step of the research enabled any degree of assessment of the rigour of the data and/or the statistical analysis used.

In all, 16 articles were considered for final inclusion in the review. The study designs and methods used varied considerably, with correspondingly differing levels of validity and reliability demonstrated. In terms of the relative rigour of the papers, there were 3 RCTs, and 7 pre-test/post-test experiments or quasi-experiments using simulation as the educational intervention, of which 5 compared simulation with a control group taught by more traditional education methods. There were 6 other studies that used single interventions and simple post-test designs, and although these studies are not considered to be as robust as the experimental and quasi-experimental designs, the pragmatic decision was taken to include them for the purposes of completeness. This paper will therefore review the evidence for using simulation in relation to the development of the non-technical skills of interpersonal communication, team working, clinical leadership and clinical decision-making.

COMMUNICATION

It is widely accepted that communication failures are one of the leading causes of inadvertent patient harm worldwide [13]. As Leonard et al. [14] note, formal training for effective communication and teamwork has historically been largely ignored. Unfortunately, the clinical environment in which healthcare takes place is becoming an ever more complex 'socio-technical' system, and the inherent limitations of human performance mean that communication and team training have now become a significant aspect of healthcare training and education. Research into healthcare communication using simulation has tended to focus upon communication as an integral part of teamwork and team training; however, there have been a small number of studies which have looked at the use of simulation in training for medical 'handover'. The handover of patient-specific information from one care giver to another has been identified as a high risk process, as it forms a patient safety-critical interface in which crucial information may be lost, inaccessible or misunderstood.

Most of these patient handover studies have used junior doctors or medical students [15], although one has involved nursing staff [16]. This pre/post test observational study took place in a 5 bedded medical 'step-down' High Dependency Unit, and used direct observation of the patient handover of 25 nurses, scored by a checklist. The educational intervention incorporated three simulation-based nurse shift handover scenarios into a teamwork and communication workshop. The authors found that there was a statistically significant increase in the quality and quantity of information handed over after the simulation intervention, and that communication using interpersonal verbal skills relevant to patient safety was improved.

TEAMWORK AND TEAM TRAINING

Effective teams are social entities that use shared knowledge, skills, attitudes, goals, and monitoring of own and others’ performance to achieve high quality teamwork [17]. Arising out of crew resource management (CRM) training in the aviation industry, healthcare team training typically involves the assimilation of learning theory principles and team behaviours, the practice of these, and the provision of high quality feedback [18]. As Baker et al. [18] note, the challenge for educators is that healthcare teams are often unpredictable, such that a group of competent individual professionals can combine together to create an incompetent team.

Teamwork in healthcare is further complicated by the fact that a number of individuals who have probably not previously worked with each other, and might not even be familiar with each other, have to collaborate for the benefit of the patient in a complex and dynamic clinical environment. In addition, team composition and context may change constantly within a clinical environment characterised by quickly developing and confusing situations, information overload, time constraints, and high risks for patient safety.

'TeamSTEPPS'

Hobgood et al. [6] conducted a teamwork training and assessment exercise based around the one-day 'TeamSTEPPS' programme, developed by the US Agency for Healthcare Research and Quality (AHRQ). The study compared four different contemporary teaching methods. These were didactic lecture (control), didactic video-based session with student interaction, role play and human patient simulation. Over 400 medical and nursing students were randomly allocated to one of the four teaching groups. This large scale RCT used a number of validated teamwork measurement tools to assess the effectiveness of the four methods, and found that although each of the different methods showed an improvement in teamwork efficacy, there was no significant difference in improvement between the methods.

The authors do acknowledge that the design of the study may have limited its ability to discern significant differences in the effectiveness of the educational interventions, particularly in the simulation and role play groups. Participants were also randomised into cohorts without considering individual learning styles. In addition, the lack of any TeamSTEPPS specific instruments limited the authors' confidence in their scoring of team behaviours. They also noted that many participants had no previous experience with high-fidelity simulation prior to the training, and argued that this could have hindered their participation in the scenarios. Furthermore, the time allotted for students to participate in the simulated scenarios (30 minutes per scenario, 1 hour in total) was limited, and this may have negatively affected the educational effect of the interactive experiences. One of the most valid points that the authors raise, and this is true of the majority of simulation studies, is that the participants were not observed longitudinally, and therefore questions remain about the impact of various pedagogies in changing long-term behaviour in clinical situations. Subsequent measures of skill retention some days or weeks after the intervention would have strengthened the results significantly.

Robertson et al. [19] adapted the TeamSTEPPS programme for medical and nursing students. Using a quasi-experimental pre/post-test design, they studied the effect that participation in a team training programme using a modified version of TeamSTEPPS would have upon students' attitudes towards teamwork skills. A total of 213 students participated in a 4-hour team-training programme that included a lecture followed by small group team training exercises involving simulation and video clips of teamwork. They found that students improved their knowledge of vital team and communication skills, attitudes toward working as teams, and identification of effective team skills.

'MedTeams'

The 'MedTeams' programme was developed by the US Military to address teamworking in high intensity combat trauma situations. It is interesting to note that the original MedTeams programme did not contain any simulation [20], although this was quickly addressed. Holcomb et al. [21] undertook the pilot simulation study in which 10 military trauma teams were evaluated in their performance during a 28 day trauma rotation at a regional trauma centre. Each team consisted of physicians, nurses, and medics. Using simulation, teams were evaluated on arrival and again on completion of the rotation. In addition, the 10 trauma teams were compared with 5 expert teams composed of experienced trauma surgeons and nurses. Two simulated trauma scenarios were used, representing a severely injured patient with multiple injuries, and team performance was measured using a human performance assessment tool. The authors found that the 10 military teams demonstrated a significant improvement in team performance.

Shapiro et al. [22] conducted a further MedTeams study that looked at the effect of simulation in addition to an existing didactic team training programme (Emergency Team Co-ordination Course [ETCC]) upon trauma team performance in the emergency department (ED). Using a single blinded and controlled observational pre/post-test study design, nurses and doctors were randomly allocated to one of four interprofessional trauma teams, further divided into two intervention (simulation) and two control groups. All teams were subjected to pre- and post-test observation. The intervention groups participated in an 8 hour simulation session and were compared against the control groups that completed the same ETCC training but spent an 8 hour shift in the ED. Following the intervention, each group was observed and scored on team behaviour. The authors described an improvement in team performance, and comparisons between pre-test and post-test scores of team behaviour showed the simulation group had improved, although the level did not reach significance (p = 0.07), while the group that completed a regular 8 hour ED shift showed no gains at all (p = 0.55). Shapiro et al. argue that following the simulation there was a perceived positive impact on teamwork behaviour in the clinical environment and, despite the lack of statistical significance, they still considered the result to be important.

Paediatrics and Midwifery

There have been a number of studies that have looked at aspects of teamwork within specialised clinical contexts, such as paediatric and obstetric care. Messmer [23], for example, looked at the role of simulation in the collaboration between doctors and nurses. Falcone et al. [24] also looked at simulation as part of a wider educational programme for multi-disciplinary trauma teams. Both of these studies observed the team behaviour of the participants in simulated emergency situations. In Messmer's study, nurses and junior doctors participated in three life-threatening scenarios. The Falcone et al. study placed emphasis on team function and communication, and also used three simulated scenarios to evaluate team performance. Both studies found an overall improvement in team performance.

The results from Messmer's study showed that intra-team collaboration and team cohesion improved with each successive scenario. The relationships within the team matured and the team members communicated more with one another. They demonstrated recognition of the strengths that the members of each professional group brought to the team, and the participants began to understand the importance of each individual team member. The Falcone et al. study was part of a wider educational programme on team performance and communication during paediatric trauma management. The simulated scenarios were used to complement these educational activities as well as to measure team performance. As with Messmer's study, Falcone et al. found that the teams performed more efficiently and appropriately in each successive scenario, as well as demonstrating an overall improvement during the subsequent longer term evaluation of the study programme.

There have also been a number of studies [25-27] that have used simulation for team training in the management of obstetric emergencies such as eclampsia and shoulder dystocia. Both Crofts et al. [25] and Ellis et al. [26] compared the effectiveness of simulation-based training for eclampsia as part of the 'SaFE' study. This large scale multi-centre RCT compared training in local hospitals and a regional simulation centre. Midwives working at participating hospitals were randomly assigned to one of 24 teams, and these teams were randomly allocated to training in local hospitals or at a simulation centre, and to teamwork theory or not. Performance was evaluated both before and after training with a standardised eclampsia scenario captured on video. All the authors found that the simulation training resulted in enhanced performance and improved teamwork in the management of life threatening obstetric emergencies. Interestingly, although the study demonstrated that teamwork improved, the improvement was not linked to the inclusion of teamwork theory training.

It may be argued that simulation is the only practical and effective way to make healthcare professionals aware of and understand the importance of teamwork and the individual aspects of team performance at a distance from real patients. As Paris et al. [17] note, well-constructed simulation scenarios allow participants to explore the different roles within a team, including the important skills required for leadership.

CLINICAL LEADERSHIP

As with intra-team communication, clinical leadership is usually regarded simply as a component of team training education. In a recent study, Radovich et al. [28] used three high fidelity simulation scenarios to enable neophyte nurse leaders to develop their leadership skills during the management of difficult interpersonal situations. Radovich et al. found that the use of simulated scenarios increased the participants' self-confidence, and gave them a much better understanding of the skills required to manage the type of complex interpersonal situations that occur within healthcare teams. Although Radovich et al. focused upon the use of simulation from the perspective of an educational programme for nurse managers, the generic leadership skills that the simulated scenarios addressed (interprofessional communication, organisational understanding, crisis management and negotiation skills) are equally applicable to the skills required in leading teams in crisis situations.

SITUATION AWARENESS

Situation awareness (SA) is a relatively new concept within healthcare and is defined by Endsley [29] as ‘the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning and the projection of their status in the near future’ (p. 36). However, in simple terms, SA may be seen as knowing 'what is going on' in any given situation, and involves the individual's perception and understanding of what is happening, and their prediction of what may happen in the future. Good SA is now recognised as an integral part of competent clinical practice, particularly in crisis situations; however, up until now it has not been actively measured in nursing or team performance.

Endacott et al. [30] and Cooper et al. [31] each looked at situation awareness and clinical decision making as part of a much wider study into the management of acute patient deterioration, and used the Situation Awareness Global Assessment Technique (SAGAT), a well-validated assessment tool for measuring SA. This was done as part of the assessment of 51 final year undergraduate student nurses undertaking two simulated scenarios relating to the management of an acutely deteriorating patient. Using a SAGAT questionnaire with 17 yes/no questions, both studies stopped the scenario at random points and looked at the students' global appreciation of their situation, their perception of the patient's physiological condition, their understanding of the clinical problem and how the scenario may progress. Overall they found that the students focused primarily upon the purely physiological aspects of the scenarios, and lacked a more global appreciation of the situations they were involved in. More recently, Cooper et al. [32] carried out a further study to examine Registered Nurses' management of deteriorating patients in a small rural hospital in Australia. They used a single intervention design, with 35 RNs undertaking two acute care OSCEs, during which their SA was assessed. They found that overall the RNs lacked SA and that their SA scores were correspondingly low. The authors argue that the use of simulation as an educational strategy would be a positive way to equip these RNs with the skills to better manage the acutely deteriorating patient. All three of these studies appear to indicate that nurses consistently lack SA, and that this will need to be addressed by nurse education in the future.

CRITICAL THINKING AND CLINICAL DECISION-MAKING

The dynamic nature of contemporary healthcare requires nurses to assume ever more complex roles, which in turn, necessitates the acquisition of higher level critical thinking skills. It is argued that the development of these skills enhances the practitioners’ ability to address complex or unfamiliar situations, and that nurses with high quality clinical reasoning skills will have a positive impact on patient outcomes, particularly when faced with complex care situations.

Both Howard [33] and Schumacher [34] evaluated the use of simulation as an educational intervention to develop students' critical thinking abilities. Critical thinking was defined as the ability to reason, deduce, and induce based upon current research and practice findings, which Howard argued was the foundation for sound clinical decision-making in nursing. Howard's study was a multi-site, quasi-experimental pre/post-test design, with a sample of 49 nursing students randomly allocated to the intervention group (simulation) or control (interactive case study) group. An exam was used to assess the students' critical thinking, and analysis revealed a significant difference in critical thinking ability, with the simulation group scoring higher on the post-test than the case study group (p = 0.07). Using a similar definition of critical thinking, Schumacher compared three different educational strategies (formal classroom teaching, high fidelity simulation and a combination of the two methods). Like the Howard study, this study used an examination designed to measure application and analysis at a cognitive level. The issue here for both studies would seem to be the appropriateness of a written examination as a measurement of critical thinking.

Ravert [35] also compared the effects of regular education plus simulation, regular education plus case study and regular education alone on students' critical thinking skills. A pre-test/post-test design was used with a cohort of 64 undergraduate nursing students, with the sample divided into three groups (2 experimental groups and one control group). The control group participated in the regular education process alone. The non-simulation group had five case study sessions and the simulation group participated in five weekly, one-hour simulation sessions.

Pre- and post-test intervention evaluations of critical thinking were obtained through the administration of non-discipline specific, statistically valid and reliable instruments. Analysis of test scores revealed improvements in post-intervention test scores across all groups; however, differences among groups lacked statistical significance. Ravert acknowledged that results of the study should be interpreted with caution, insofar as neither of the critical thinking measurement instruments used had been designed specifically for use with nursing students.

DISCUSSION

There is much intuitive and anecdotal evidence for the effectiveness of simulation in the acquisition of non-technical skills in healthcare; however, the research evidence is limited and often contradictory. One of the challenges for this review therefore was to identify why there are a number of studies which have produced equivocal results, and why some have found a significant difference between the impact of simulation and other educational methods, and some have not. It may be argued that one of the main reasons for the large number of equivocal results in simulation education, other than the methodological deficiencies in many of the studies, is that the researchers are simply asking the wrong questions, or looking at the wrong things in the wrong way.

Are we Missing the Point?

As Goodman & Lamers [36] note, all the evidence for the benefits of simulation may well be present in the data; however, the key is the way in which the evidence is analysed and presented. They argue that the solution is to look at the evidence from a different perspective, for example by monitoring aggregate rates of error rather than trying to measure and compare average scores. To illustrate their point, Goodman & Lamers use the findings of the Sears et al. study [37] in which there was a significant reduction in near-miss medication errors among nursing students in placements who substituted simulation training for some of their initial placement time (p < 0.01). Data were collected on medication errors (or near misses) by participants in both the control group (no prior simulation training) and the treatment group (simulation training) after both groups of participants had begun to administer medications to 'real' patients.

Goodman & Lamers argued that the findings would not have been as clear had Sears et al. simply compared the average rate of successful drug administration in both groups. Given that the numbers of near-miss drug errors made by students in both groups were relatively small in comparison to the large number of successful drug administrations, this would have all but obscured the relative error rates in each group. They argue that the strength of the study was that it compared the relative error rates for both groups of participants, rather than the average error rate. This approach may have merit for studying specific skills such as drug administration; however, the challenge would be to adapt it to study non-technical skills such as interpersonal communication during a medical or nursing patient handover.
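The arithmetic behind this argument can be illustrated with a minimal sketch. The counts below are hypothetical, chosen purely for illustration, and are not data from Sears et al. or Goodman & Lamers; the function and variable names are likewise assumptions. The sketch shows how two groups with nearly identical success rates can nevertheless differ by a factor of two in their error rates.

```python
def rates(errors: int, administrations: int) -> tuple[float, float]:
    """Return (success rate, error rate) for one group of participants."""
    error_rate = errors / administrations
    return 1 - error_rate, error_rate


# Hypothetical counts for illustration only (NOT taken from any study).
control_errors, control_total = 20, 1000        # no prior simulation training
simulation_errors, simulation_total = 10, 1000  # simulation training

control_success, control_error = rates(control_errors, control_total)
sim_success, sim_error = rates(simulation_errors, simulation_total)

# Comparing success rates: the groups look almost the same.
success_gap = sim_success - control_success

# Comparing error rates: the simulation group makes half as many errors.
relative_error = sim_error / control_error

print(f"Success rates: control {control_success:.1%}, simulation {sim_success:.1%}")
print(f"Difference in success rate: {success_gap:.1%}")
print(f"Error rate in simulation group relative to control: {relative_error:.2f}")
```

With these assumed counts, the success rates differ by only one percentage point, while the ratio of error rates makes the halving of errors in the simulation group plain.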

Key Methodological Issues

Determining the effectiveness of simulation education when compared with other methods of education is inevitably complicated by the lack of robust evidence, and few studies could be directly compared due to various experimental designs delivering a range of intervention strategies. Although most studies had quasi-experimental designs with some random assignment to groups from particular student cohorts, the characteristics of non-participants were often unknown. Participant recruitment was predominantly by convenience sampling and the characteristics of those who did not volunteer (or were excluded from the studies) were usually not made clear. In the majority of studies sample sizes tended to be small (<100) and were usually non-representative. Although traditionally seen as an important indicator of study rigour, sample size is less of an issue in simulation studies since the recruitment of large numbers of participants for this type of study is often complicated and technically difficult to achieve. The smaller sample sizes found in this type of study are compensated for by both the richness of the data and the use of mixed methods approaches, such as the use of retrospective video review.

The Temporal Dimension

As Hobgood et al. [6] noted, there was no longitudinal dimension to many of the studies, and this prevents the studies from drawing any real conclusions regarding clinicians' long-term behaviour change. There was also significant variability in the timing of the assessment of educational outcomes, as in some studies outcomes were assessed immediately after the intervention and in some studies they were assessed at an arbitrary time after the intervention had taken place. There is no rationale or explanation given for these differences, and as a result there is clear potential for bias. These variations severely limit the ability of this review to draw any inferences from the studies described. In some, but not all studies, group differences such as previous clinical experience or knowledge were controlled. Several studies were confounded by very limited exposure to a simulation experience, and there was a lack of consistency or any rationale provided for the length of simulation exposure. Typically simulation exposure ranged from one 15–20 minute session only to weekly simulation sessions each of 90 minutes over 5 weeks, and this makes it very difficult to draw meaningful conclusions as to the utility of simulation.

Comparison of Simulation with Other Interactive Teaching Methods

A number of studies have found little significant difference in the effectiveness of simulation when compared to other similar educational strategies. Ravert [35], for example, found that both of the intervention groups in the study increased their critical thinking skills, and reported significant gains in self-efficacy, although the simulation group did not significantly outperform the control group in any aspect of the study. The reality may be that modern teaching methods such as case study discussions are much more interactive when compared to more traditional didactic methods such as lectures. Problems may arise therefore when comparing simulation with these other modern, interactive teaching methods, particularly when studying issues such as self-confidence or critical thinking.

CONCLUSIONS

In recent times there has been a broad acceptance of simulation in healthcare education, although the studies attempting to assess its worth have found hard data to confirm the effectiveness of simulation elusive, particularly in relation to non-technical skills [38]. It should be remembered that in comparative terms simulation is still a relatively new educational strategy in healthcare education, and consequently the evidence base for simulation is comparatively small, although growing quickly.

It would be very easy to dismiss simulation due to the relative lack of 'hard' evidence as to its effectiveness, particularly in the field of nurse education. The most important point to make, supported by the evidence from simulation-based medical education, is that whilst there are no negative findings, there are many positive findings that we may draw upon. For example, simulation is positively associated with significantly improved interpersonal communication skills at patient handover [16], and it enables the effective development of transferable, transformational leadership skills [28]. It has also been clearly shown to improve team behaviours in a wide variety of clinical contexts and clinical personnel, associated with improved team performance in crisis situations [10, 19, 21-22, 23-25]. Simulation has also given an insight into how we might better address the development of situation awareness in nursing students, and provided the direction for further study in this area [30-31]. From a cognitive skills perspective, simulation has also been demonstrated to improve students' critical thinking and clinical reasoning in complex care situations, using a number of different measuring tools [33-35] and to aid the development of students' self-efficacy and confidence in their own clinical abilities.

As noted earlier, there may be other educational strategies (e.g. interactive case studies) that also provide participants with these skills, and indeed a number of the studies in this review did use simulation to complement other educational strategies. It should be noted, however, that the findings from the 'SaFE' study [25-27] indicated that there was no benefit in the use of traditional didactic team teaching in combination with simulation. What is clear from this review and others is that simulation is able to provide participants with an interactive and immersive learning environment in which to learn, that is safe and controlled so that the participants are able to make mistakes, correct those mistakes in real time and learn from them, without fear of compromising patient safety. Participants in simulation are also able to rehearse the clinical management of rare, complex or crisis situations in a valid representation of clinical practice, before practising on patients. In summary, the most powerful single argument for simulation remains that participation in simulation-based education appears to help to prevent participants from making mistakes in the future, by providing a set of clinical circumstances in which it is permissible to make mistakes and learn from them.

CONFLICTS OF INTEREST

The authors confirm that this article content has no conflicts of interest.

ACKNOWLEDGEMENTS

RL drafted the manuscript. Thanks to ANS & MMS who read and commented upon multiple drafts of the manuscript. All of the authors approved the final manuscript.