All published articles of this journal are available on ScienceDirect.

A Systematic Review and Narrative Synthesis of the Effectiveness of Peer- versus Faculty-led Simulation for Clinical Skills Acquisition in Undergraduate Student Nurses. MSc Project Findings

Abstract

Background:

Clinical skills learning is an integral part of undergraduate nursing programmes in the United Kingdom. Faculty staff teach some elements of clinical skills, and clinicians in practice teach others. International evidence indicates that some students feel overly anxious when taught by faculty members but less so with their peers, suggesting that peer-led clinical skills teaching and learning might reduce anxiety and facilitate the acquisition and retention of clinical skills.

Objective:

The objective of this systematic review was to explore the research on undergraduate student nurses’ acquisition of skills within the simulation setting, particularly comparing peer-led and lecturer-led learning.

Methods:

A systematic review of the literature was undertaken to find all available evidence. Nine healthcare databases were searched using Boolean and MeSH search terms, including ‘Peer-to-peer’, ‘Clinical Skill*’, ‘Simulat*’, and ‘Student Nurs*’. Owing to the heterogeneity of the research found, statistical meta-analysis was not possible, so a narrative synthesis based on thematic analysis was conducted, in which a three-person research team critically appraised 17 articles, nine of which were included in the review.

Results:

The included articles came from the USA, Australia, China, Malaysia/Indonesia, and Korea.

Three main themes emerged from the findings: psychological factors, motor skills, and educational issues. The use of peers can help to improve students’ motor skills, improve the psychological experience of skills learning, and offer students a chance to be active participants in their education.

Conclusion:

Having explored the literature, we conclude that peer-to-peer teaching and learning could have a place in undergraduate nursing education; however, it is not clear whether student nurses’ skills acquisition is more effective when mediated by peer-led or lecturer-led teaching. Further research is required in this area to quantify and compare outcomes.

1. INTRODUCTION

In nurse education in the United Kingdom (UK), clinical skills teaching and learning forms part of the curriculum. Some of this takes place in students’ practice placement areas, and some is formalised in university facilities such as simulated skills settings. In academia, these skills are regarded as a critical component of every undergraduate nursing programme [1]. From September 2017, with the advent of degree nurse apprenticeships and other non-traditional routes into nurse education, many students gained direct entry into stage two of the nursing curriculum (having achieved appropriate hours and experience), bringing experience and knowledge of clinical skills that could be used to teach peers without such backgrounds [2-7]. The UK Council of Deans of Health [8] has suggested that Higher Education Institutions (HEIs) support students to be equipped with clinical skills as well as the ability to teach others, and this has influenced some of the changes to the competencies introduced in the Nursing & Midwifery Council (NMC) 2018 Standards for Student Supervision and Assessment [9]. The NMC [9] describes simulation as an artificial representation of a real-world practice scenario that supports student development and assessment through experiential learning with the opportunity for repetition. Simulation can be conducted in placement and/or in the university setting; either way, the emphasis is on preparing students for potentially real-life situations in a supportive, educational environment where their knowledge can develop. The number of hours permitted in simulated settings has increased, meaning that HEIs can deliver more clinical skills teaching in the simulation setting [3], and final-year undergraduate nurses can now undertake a peer-teaching session with junior students [10] as an opportunity to supervise and teach a junior learner or colleague in practice [11].

All of these regulatory and professional developments suggest a renewed emphasis in the UK on exploring the potential for peer-led learning of skills. ‘Peer’ denotes similarity in rank, character, or status and comes from the Latin ‘par’, meaning ‘equal’. For student nurses, this corresponds to the student’s current year of study. Davis and Richardson [12] describe peer-to-peer methods of facilitating learning as creating a supportive relationship between students in the same year. They further discuss how peer-learning complements adult learning theories, as it unifies cognitive, social, and constructivist theories [12]. A closely related concept is near-peer teaching, in which students in senior years of study teach students in junior years [13]. This method has been used more in medical education than in nursing; however, a recent Australian study examined its use in nurse education [14] and found little evidence to support its benefits within the simulation setting compared with lecturer-led teaching sessions.

Two systematic reviews that discuss the benefits of peer-to-peer teaching and learning inform the background to this study [15, 16]. Nelwati et al. [15] described peer-to-peer learning as an effective and innovative intervention for undergraduate education, and Stone et al. [16] complemented this by discussing how students involved in peer-to-peer learning are encouraged to take an active role in their education, moving away from behaviourist teaching methods towards social constructivism. Both reviews found that peer-to-peer learning benefited students but noted ambiguities in the terminology used to describe peer-to-peer approaches. They reported increased confidence amongst students and greater satisfaction between peers. Both reviews noted that students perceived peer-to-peer interactions favourably; in particular, there was a reduction in stress and anxiety. However, one disadvantage discussed was that student peer teachers required a level of supervision to ensure the accuracy of the knowledge passed on to their peers and to avoid misinformation, so faculty or clinical demonstrators still needed to be present.

Although peer-teaching has been documented within medical education more than in nurse education, there is still a gap in knowledge about how students are taught the skills of a peer-tutor. Burgess and McGregor [17] conducted a systematic review of 19 articles examining the design, content, and evaluation of student peer-teaching programmes across the healthcare professions and noted that although teaching is increasingly recognised as a core skill for all healthcare professionals, training in it is limited to medical programmes and is voluntary. Rees et al. [18] reported similar findings and added that although there was evidence to support the use of peer-tutors, there was a paucity of evidence on how peer-tutoring skills were taught. Therefore, before student nurses can become peer-tutors, they will need to learn the necessary skills, such as giving feedback and feed-forward, and assessment.

Gray et al. [19] found that the presence of academic faculty during peer-supported skills sessions was positive but did not report whether students preferred peer- or faculty-led sessions. However, the authors did note that students found working with peers a supportive and stress-free environment. Gray et al. [19] also found a risk of collusion if the skill being assessed by a peer formed part of an assessment, and that having academic faculty facilitate and supervise ensured consistent and safe practice. This suggests that although peers can reduce a student’s anxiety levels, faculty and clinical demonstrators are still needed [20].

Given the NMC’s 2018 regulatory changes in the UK and the growing body of research on peer-led teaching and learning in medical education and internationally, a systematic review was warranted to synthesise the available evidence on peer-to-peer teaching and learning compared with lecturer-led teaching, to answer the question ‘What are the most effective methods of assuring clinical skills acquisition in simulation settings in undergraduate student nurses, peer-to-peer or lecturer-led methods?’

2. METHODS

Following recent changes to its registration criteria, it was not possible to register this review with PROSPERO [21], which requires a health-related outcome; however, the PROSPERO protocol was followed.

2.1. Search Strategy

Based on the review question, the following PICO was generated:

P – Population – Undergraduate nursing students

I – Intervention – Peer-to-peer teaching

C – Comparator – Clinical simulation settings using teacher/tutor/lecturer/clinical demonstrator led teaching.

O – Outcome – Skill acquisition

A search strategy was created using the following academic databases:

• AMED.

• CINAHL.

• Cochrane.

• EMBASE.

• Internurse.

• Joanna Briggs Institute.

• Medline.

• National Institute for Health and Clinical Excellence.

• Open Grey.

MeSH (Medical Subject Headings) terms were used to search for relevant articles. MeSH terms within Boolean search engines help locate the relevant articles related to the concepts of the topic, allowing for a targeted search [22].

2.2. Inclusion and Exclusion Criteria

The following inclusion criteria were applied:

• Undergraduate nurse;

• Simulation setting;

• Clinical skills;

• Human patients.

Despite the extensive search strategy and inclusion criteria, some papers were retrieved relating to other professions, and so the following exclusion criteria were added:

• Non-healthcare student;

• Non-English language;

• Studies not in the simulation setting.

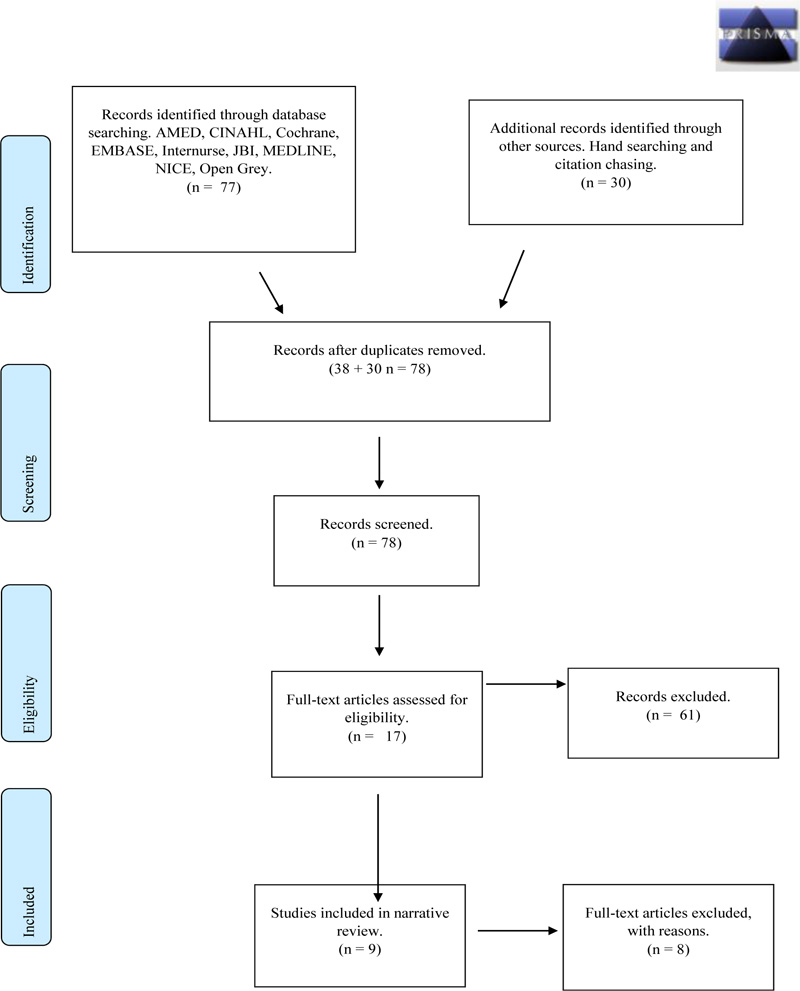

The PRISMA diagram (Fig. 1) summarises the results of the searches after the inclusion and exclusion criteria were applied.

2.3. Study Selection and Quality Appraisal

Study selection was an iterative process of reading and applying the inclusion/exclusion criteria until a final set of articles that could be appraised was reached. Many articles were duplicated across database searches; these duplicates were removed before the articles were screened for relevance. The searches originally produced 77 articles, which were reduced to 38 once duplicates were removed. As many of these articles discussed the concept of peer-teaching, a hand search of their reference lists and citation chasing were conducted to identify any articles missed during the database search [23]. In total, a further 30 articles were found whose titles and abstracts suggested they would be relevant to this review; this increased the number of articles to be scrutinised to 78. Of these, 61 articles were rejected on the grounds of relevance to the question and the inclusion/exclusion criteria, and the remaining 17 articles were appraised by the review team.

The Joanna Briggs Institute (JBI) [24] has a suite of 13 critical appraisal tools that is internationally recognised by the research community [25]. The JBI tools can appraise quantitative studies, qualitative studies, and systematic reviews but do not include a separate tool for mixed methods or survey research. Therefore, the Mixed Methods Appraisal Tool (MMAT) [26] and the Centre for Evidence-Based Medicine (CEBM) [27] Critical Appraisal of a Survey tool were used for those study designs.

| Search terms |
|---|
| Peer to peer OR Peer-to-peer OR Peer teaching OR Peer-teaching OR Peer learning OR Peer-learning OR Peer led OR Peer-led OR Student led OR Student-led OR Peer facilitated OR Peer-facilitated OR Peer assisted learning OR Peer-assisted-learning OR Peer mentor* OR Peer-mentor* OR Peer tutor* OR Peer-tutor OR student-centred learning |
| AND |
| Clinical Skill* OR Skill* OR Skill* acquisition OR Skill* achievement OR Skill* learning OR Skill* teaching OR Skill* facility* OR skill* simulat* OR simulat* |
| AND |
| Student nurs* OR Under-graduate nurs* OR Undergraduate nurs* OR Lecturer led OR Lecturer-led OR Facilitator led OR Facilitator-led OR Peer led OR Peer-led |
| NOT |
| Engineering students OR Dentist* students OR pharmacy students OR Medicine OR Veterinary students |
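The search string combines synonyms with OR within each concept, joins concepts with AND, and removes unwanted populations with NOT. The Python sketch below illustrates that assembly under stated assumptions: the helper name `build_query` is hypothetical, the term lists are abbreviated from the table, and real databases such as CINAHL or Medline each have their own syntax for phrases, field tags, and truncation, so the output is a generic string rather than a query any particular interface will accept verbatim.

```python
def build_query(concepts: list[list[str]], exclusions: list[str]) -> str:
    """Join synonyms with OR inside each concept, concepts with AND, then NOT the exclusions.

    Hypothetical helper for illustration; not a database-specific query builder.
    """
    # Parenthesise each concept group so the intended operator precedence is explicit.
    blocks = ["(" + " OR ".join(terms) + ")" for terms in concepts]
    query = " AND ".join(blocks)
    if exclusions:
        query += " NOT (" + " OR ".join(exclusions) + ")"
    return query


# Abbreviated, illustrative term lists taken from the search table.
peer = ["Peer-to-peer", "Peer teaching", "Peer-led"]
skills = ["Clinical Skill*", "simulat*"]
population = ["Student nurs*", "Undergraduate nurs*"]
excluded = ["Engineering students", "Medicine"]

print(build_query([peer, skills, population], excluded))
```

Wrapping each concept in parentheses keeps the OR-within, AND-between structure unambiguous, since search interfaces do not all apply the same default operator order.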

Three researchers independently reviewed the final papers. Reviewers were blinded to each other’s appraisals, and multiple reviewers were used, to reduce or eliminate selection bias [28]. Each reviewer appraised all 17 articles, giving each a numerical score based on the tool used, which was then converted into a percentage. Any article scored below 70% by all three reviewers was automatically rejected; this threshold was set to include only high-quality research studies. Following the blind review, all 17 articles were discussed in a face-to-face meeting where any discrepancies could be resolved. Of the 17 articles remaining after the PRISMA process and inclusion/exclusion criteria, eight were scored low by the raters (GW, AS, and DC, all university lecturers with some experience of critical appraisal), resulting in nine articles being selected for the final review.
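The screening rule described above (convert each reviewer’s checklist score to a percentage, and reject automatically only when all three reviewers fall below 70%) can be sketched in Python as follows. The checklist length and all scores are hypothetical; converting to a percentage is presumably what allows articles appraised with checklists of different lengths to be judged against a single threshold.

```python
THRESHOLD = 70.0  # percentage cut-off used by the review team


def to_percentage(score: int, max_score: int) -> float:
    """Convert a raw checklist score into a percentage of the maximum possible score."""
    return 100.0 * score / max_score


def auto_reject(scores: dict[str, int], max_score: int) -> bool:
    """Reject automatically only if every reviewer's percentage is below the threshold."""
    return all(to_percentage(s, max_score) < THRESHOLD for s in scores.values())


# Hypothetical scores from the three raters on a hypothetical 10-item checklist.
article_a = {"GW": 5, "AS": 6, "DC": 6}  # all below 70%: automatic rejection
article_b = {"GW": 6, "AS": 8, "DC": 9}  # raters disagree: not rejected automatically

print(auto_reject(article_a, 10), auto_reject(article_b, 10))
```

An article such as `article_b`, where the raters disagree, is not rejected by the rule itself; in the review, such cases were resolved at the face-to-face meeting.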

| Author | Methodology | Method | Country | Participants | Appraisal Tool |
|---|---|---|---|---|---|
| Curtis et al. [32] | Quantitative. Quasi-experiment | Pre-post test with questionnaire | USA | 637 | JBI Quasi-experimental |
| Kim and De Gagne [31] | Quantitative. Quasi-experiment | Questionnaire; control group | USA | 57 | JBI Quasi-experimental |
| Brannagan et al. [33] | Quantitative. Quasi-experiment | Pre-post survey | USA | 230 | JBI Quasi-experimental |
| Roh et al. [29] | Quantitative. Quasi-experiment | Likert-style survey | Korea | 65 | JBI Quasi-experimental |
| Li et al. [35] | Mixed | Q-methodology, survey + interviews | China | 40 | MMAT |
| Kim-Godwin et al. [30] | Quantitative. Cohort | Likert-style survey | USA | 50 | JBI Cohort |
| Turnwall [34] | Integrative review | Integrative review | USA | N/A | JBI SR |
| Nelwati et al. [15] | Systematic review | Systematic review | Malaysia/Indonesia | N/A | JBI SR |
| Stone et al. [16] | Systematic review | Systematic review | Australia | N/A | JBI SR |

3. RESULTS

A narrative synthesis of the findings was completed, as the literature did not show the homogeneity required for meta-analysis [23].

Of the articles chosen for the narrative synthesis, five were quantitative [29-33], three were systematic reviews [15, 16, 34], and one used mixed methods [35]. No qualitative articles were suitable for inclusion; such articles could have helped to explain the experiences of students involved in peer-to-peer learning. However, the mixed-methods study [35] did allow students to discuss how they felt about the supportive aspects of peer-tutoring in simulated settings. The methodologies of the studies and their geographical locations are shown in Table 2.

The included studies are critically appraised below according to their methodology, using the relevant appraisal tools.

3.1. Quantitative Study Designs

The four quasi-experimental articles used similar methods to collect their data [29, 31-33], all using pre- and post-test designs or post-test questionnaires. This is common in quasi-experimental research and ubiquitous in the social sciences and healthcare [36]. Such ‘before and after’ studies examine cause-and-effect relationships [37, 38].

Two studies [29, 31] researched the debriefing of students following a simulation experience. Both clearly defined their intervention, comparing instructor-led and peer-led debriefing in terms of their effects on students’ nursing skills, knowledge, and self-confidence following a simulation using a standardised patient. Brannagan et al. [33] highlighted the role of the peer-teacher and its impact on learning experiences, whereas Curtis et al. [32] evaluated how peer-to-peer facilitation of a mid-fidelity simulation experience could improve active engagement.

Although Curtis et al. [32] did not use a control group, the others did, which should allow differences between groups to be attributed to the intervention rather than to a Hawthorne effect. Gray [39] further discusses how a control group allows the researcher to show the effect of the independent variable on the dependent variable. Control groups are therefore useful for demonstrating that the intervention has an effect on randomly assigned participants compared with the control group, although finding groups matched on key variables such as age and gender can prove difficult [39].

Nevertheless, randomly assigning participants to either a group receiving peer-led teaching/instruction or a control group gives the research design greater internal validity and reduces the bias that the researcher could introduce [37, 39]. Randomisation thereby provides justification for believing that any change is a result of the intervention [39].

Roh et al. [29] and Kim and De Gagne [31] both used a non-equivalent control group with a pre-test and/or post-test design. Kim and De Gagne [31] studied 57 third-year nursing students, 26 receiving instructor-led debriefing and 31 receiving peer-led debriefing. These students were randomly assigned to groups by administrative staff, demonstrating rigour by reducing the possibility of allocation bias [40]. Roh et al. [29], by contrast, recruited 65 third-year students who were randomly assigned to receive either peer-led or instructor-led debriefing after the simulation. Non-equivalent control group designs allow both groups to be measured after the intervention, but without a pre-test there is little evidence of the effects that other variables could have had on each group [36]. A further weakness of this design is that participants can easily identify whether or not they are in the intervention group, which could influence the dependent variable, either consciously or subconsciously [41]. This again can bias the results and obscure the true effect of the intervention.

Curtis et al. [32] used a post-test-only design to evaluate students’ self-confidence in their clinical skills following a simulation experience. Harvey and Land [40] describe the post-test-only design as a ‘basic’ way of assessing the independent variable, but it has limitations, as it does not take any pre-intervention data into consideration. This weakness was not identified in the research and could have been addressed by adding a pre-test to the design. However, Curtis et al. [32] recruited 509 participants, which can be considered a large post-test group.

Convenience sampling was used in two papers [29, 33]. Although two papers [29, 31] did not describe their sampling process, they used the participants who were easiest for nurse academics to recruit, namely student nurses. This method of recruitment could introduce bias, as participants may have volunteered because they had an interest in the area being studied [38, 39].

All four quantitative studies [29, 31-33] indicated that participants had been randomly allocated to groups and that all groups receiving the intervention received the same exposure. However, only one [31] reported that participants were randomly allocated by administrative staff rather than study staff, which could help reduce allocation bias [41]. Two others [29, 33] stated that their participants were randomly allocated to groups either receiving the intervention or not but did not discuss bias. This does not necessarily mean that bias occurred; however, identifying potential bias helps reinforce rigour. Curtis et al. [32] did not acknowledge selection bias, but as they used a single-group post-test questionnaire, all students were invited to take part. However, they did state that some questionnaires had missing data (n=6), which were replaced using the estimated mean.
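Curtis et al. [32] replaced missing questionnaire data with the estimated mean. The Python sketch below shows simple mean imputation, assuming the missing values are responses within a single questionnaire item (the level at which Curtis et al. imputed is not stated here); the function name and data are hypothetical.

```python
from statistics import mean
from typing import Optional


def impute_mean(responses: list[Optional[float]]) -> list[float]:
    """Replace missing (None) responses with the mean of the observed responses."""
    observed = [r for r in responses if r is not None]
    if not observed:
        raise ValueError("cannot impute: no observed responses")
    fill = mean(observed)
    return [fill if r is None else r for r in responses]


# Hypothetical Likert-scale self-confidence scores with two missing answers.
scores = [4, 5, None, 3, 4, None]
print(impute_mean(scores))  # [4, 5, 4, 3, 4, 4]
```

Mean imputation leaves the sample mean unchanged but reduces the variance of the variable, which is one reason it is usually regarded as a simple rather than a rigorous way of handling missing data.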

Kim-Godwin et al. [30] conducted a cohort study, a design in which a key element is that all participants share the same characteristics [42]; in this study, the participants were undergraduate student nurses enrolled in a community health nursing course. However, the groups differed: the first group received the simulation experience while the second group peer-evaluated it, after which the roles were reversed. The second group’s scenario was changed only slightly, and having seen how they were likely to be assessed, they could adjust their practice to suit the assessment aims. Kim-Godwin et al. [30] acknowledged these limitations and that the research was conducted on only one group of students (n=50), which limits the study. Had several cohorts all received the same intervention as the first cohort, a greater comparison of the data could have been achieved, and the findings would have been more valid. Key variables affecting the groups included age, educational background, and gender, all of which could have affected the outcome. Although demographics and group sizes were discussed, it was difficult to establish whether the groups were randomised or how they were otherwise created. This could be considered acceptable, as nursing students are a diverse population from different backgrounds; there is no such thing as a typical student nurse. However, Gray [39] states that without randomised group allocation, it is impossible to say that both groups were equivalent. If one group achieved better results than the other, it is possible that one group had an advantage; this was acknowledged, but the use of further groups could have clarified why this was the case.

That said, as both groups produced comparable results, it could be argued that there was parity between groups, with little or no statistically significant evidence that the intervention favoured one group over the other [39]. Further limitations included the small convenience sample and the participants’ differing ages and educational experience, again reducing the validity of the study. Nevertheless, Kim-Godwin et al. [30] accepted these limitations and concluded that further research into peer-to-peer working is needed.

3.2. Systematic Literature Reviews

Three research teams conducted literature reviews [15, 16, 34]. According to the JBI [43], systematic reviews sit at the top of the hierarchy-of-evidence pyramid. Systematic reviews need to follow an exhaustive process that can be replicated by the reader and demonstrate that the evidence included was of sufficient quality [42]. With this in mind, not all systematic reviews are at the top of the hierarchy of evidence. Using tools to appraise the validity of the evidence collated within a review allows the reviewer to upgrade or downgrade that evidence [44]. For instance, a review of randomised controlled trials would be seen as being at the top of the hierarchy of evidence, whereas if the participants were not randomised, the evidence could be downgraded to a lower level [44].

The term integrative review is relatively new and was introduced when research suggested that systematic reviews used evidence only from RCTs [23]. An integrative review includes both qualitative and quantitative evidence [23]. Whittemore and Knafl [45] stated that integrative reviews can enhance nursing research but can lack rigour, introducing inaccuracies and bias by combining such diverse methodologies.

The authors of all three reviews meticulously reported their methods, search strategies, and findings [15, 16, 34]. Nelwati et al.’s [15] systematic review of qualitative research demonstrated that they had used the ENTREQ (Enhancing Transparency in Reporting the Synthesis of Qualitative Research) statement, which is a guideline for the synthesis of qualitative data [46].

Stone et al. [16] sought to ascertain whether undergraduate student nurses benefited from peer-learning. They identified a lack of research from the highest level of the evidence hierarchy, such as RCTs, as this design does not lend itself to assessing students’ perceptions. However, many research articles explored students’ perceptions, using methods ranging from pre- and post-testing to mixed methods combining interviews and questionnaires. They demonstrated that research into peer-learning is available but that, owing to the variation in research design, drawing meaningful conclusions could be difficult. Some researchers or policy makers may rank such research as moderate to low quality due to a lack of rigour [47]. Stone et al. [16] identified that the research was likely to come from observational or case study designs, which the JBI classification [43] would place at the ‘moderate’ level of the hierarchy.

The integrative review conducted by Turnwall [34] found that research into peer-learning came from a variety of paradigms and methods. It investigated how peer review prepares students for the challenges of giving and receiving peer feedback. Turnwall [34] described five stages for conducting the review: problem solving, literature search, data evaluation, data analysis, and presentation. Although the author did not explicitly follow these stages, a clear, concise, and methodical design process was evident. Turnwall [34] discussed why peer-learning and assessment are important within nurse education and conducted a thematic analysis of all the articles found; however, as only one author appraised each article, this raises the issue of bias [48]. This limitation of Turnwall’s [34] research could have been addressed by using multiple raters.

None of the systematic reviews [15, 16, 34] established homogeneity, owing to the range of research methods used by the included articles. All the authors used English language as an inclusion criterion; this may be because the journals they were targeting published only in English, or because including other languages could delay a review. Two of the reviews [16, 34] specifically noted that a particular limitation was that they included only articles published in English. They included only peer-reviewed articles, with no acknowledgement of grey or unpublished research or of any contact with experts in the field [39]. Grey literature and other unpublished work can be a rich source of data, and disregarding it can expose systematic reviews to publication bias [40].

Turnwall [34] documented a comprehensive search strategy, including the search engines and bibliographic databases used, MeSH criteria, and a PRISMA flow chart outlining the inclusion and exclusion criteria, allowing for replication by others [23]. Nelwati et al. [15] and Stone et al. [16], on the other hand, used flowcharts of their own design but with similar outcomes, and both stated that they used a comprehensive search strategy. A statement of limitations and discussion was included, acknowledging that, as the articles were not homogeneous, a direct comparison was not possible. Inevitably, studies brought together for a systematic review will differ, and such differences are described as heterogeneity [49, 50]. Heterogeneity arises because different researchers use different methods to answer the same question; without homogeneity, a direct comparison cannot be made between studies addressing similar questions. Nelwati et al. [15] searched for qualitative research and conducted a thematic analysis of their findings, as did Turnwall [34], who also found qualitative articles during their search. Qualitative research clearly has rich potential for evaluating healthcare interventions; however, systematic reviews using solely qualitative research are known to have issues such as researcher and publication bias [51]. This is of particular note given that Turnwall [34] was a single author and rater.

3.3. Mixed Methods Study Design

Li et al. [35] conducted a Q-methodology study using an extensive questionnaire. Q-methodology is widely used in psychology and the social sciences and combines quantitative and qualitative methods to convert subjective points of view into objective outcomes [52]. Although Li et al. [35] did not explicitly state the order of data collection, they did report that, during the interview stage, participants were given the statements to rank by significance. Q-methodology requires only a small number of participants; Li et al. [35] initially recruited 58 participants, of whom 18 were excluded. This convenience sample of 40 undergraduate student nurses was interviewed by an independent researcher for around 60 minutes each, a measure intended to reduce the potential for the researchers to bias the outcomes.

The concourse is a list of statements given to participants that allows them to express perspectives on, or responses to, specific subjects [53]. Li et al. [35] initially derived 79 statements from their extensive literature review, with help from three educational experts and two nursing professors, but reduced these to 58 because some were ambiguous or overlapping. In a Q-sort, participants are given pre-determined statements about a particular topic and asked to rank them, for example from -5 (strongly disagree) to +5 (strongly agree) [40]. As all participants were from one university, the authors acknowledged that their findings might not offer wider insight into other universities.
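In a typical Q-sort, the ranking grid has a fixed number of slots at each score, so participants must distribute the statements in a forced, roughly normal shape. The Python sketch below checks that a completed sort fits such a grid. The column capacities are hypothetical, chosen only to total the 58 statements used by Li et al. [35], as the grid they applied is not reported here; the statement labels are also placeholders.

```python
from collections import Counter

# Hypothetical forced distribution: how many statements may be placed at each
# score from -5 (strongly disagree) to +5 (strongly agree). Totals 58 slots.
CAPACITY = {-5: 2, -4: 3, -3: 4, -2: 6, -1: 7, 0: 14, 1: 7, 2: 6, 3: 4, 4: 3, 5: 2}


def is_valid_q_sort(sort: dict[str, int], capacity: dict[int, int]) -> bool:
    """Return True if every statement sits on the grid and every column is exactly full."""
    if any(score not in capacity for score in sort.values()):
        return False  # a score outside -5..+5 cannot be placed on the grid
    counts = Counter(sort.values())
    return all(counts[score] == slots for score, slots in capacity.items())


# Build a hypothetical completed sort: statements S1..S58 filled column by column.
statements = [f"S{i}" for i in range(1, 59)]
scores = [score for score, slots in sorted(CAPACITY.items()) for _ in range(slots)]
example_sort = dict(zip(statements, scores))

print(len(example_sort), is_valid_q_sort(example_sort, CAPACITY))  # 58 True
```

Checking the sort is only the data-entry step; Q-methodology then typically analyses the completed sorts with by-person factor analysis to identify shared viewpoints.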

4. DISCUSSION

This narrative synthesis used a systematic review methodology to investigate the question ‘What are the most effective methods of assuring clinical skills acquisition in simulation settings in undergraduate student nurses, peer-to-peer or lecturer-led methods?’ A rigorous search strategy was adopted to locate all relevant literature, followed by a synthesis of the data found. The articles reviewed came from the USA, Australia, China, Malaysia/Indonesia, and Korea, indicating that this is an issue of international relevance. Five used quantitative study designs, three were systematic reviews, and one used a mixed-methods design, showing that there is as yet no consistent approach to researching this area.

| Domain | Main Theme | Sub-Themes | Authors |
|---|---|---|---|
| Psychological factors | Reduced anxiety for students. Improved self-confidence. | Social interaction and friendship between years. Feeling anxious with lecturer or instructor present. Increased anxiety for peer-tutors. | [15, 16, 31, 33, 35]; [15, 16, 30-33, 35] |
| Motor skills | Increased confidence in psychomotor skills. | Instructor-led teaching yielded significantly higher performance in skills. Confidence in skills ability before approaching patients. | [15, 16, 30-32, 35] |
| Educational issues | Cost effective. Active engagement, involving students in their learning. Instructor-led teaching received better feedback and satisfaction. Still a need for a lecturer to be present. | Lecturer needed to be present and confirm competency. Cost-effective alternative when lecturer numbers are low. Instructor-led teaching received better scoring than peer-led. Creative simulation before practice. Structured tool for feedback. Increased student satisfaction when peer-teachers were used. Anonymity of peer-reviewers helped to maintain a safe environment and avoid biased marking. Standardised patient for simulation. Peer-tutors need training. | [16, 29, 30, 32, 33]; [15, 16, 30, 32, 35]; [29, 31, 33]; [16, 29, 31-33] |

The findings of this narrative review highlight that, although there has been research on peer-to-peer teaching and learning, research into how students acquire the necessary skills through peer-to-peer or instructor-led teaching in the simulation setting is more limited [54]. Both positive and negative influences were identified. Positive factors were an increase in self-confidence, increased confidence in cognitive and psychomotor skills, a reduction in the number of faculty staff needed, and active engagement of students in their learning. Lecturers were still required, even when peer learning was in operation. Negative factors were difficulty with large groups, inadequately prepared peer-tutors, and imposing faculty staff. These positive and negative factors inform the three broad themes of this review: psychological factors, motor skills, and educational issues. Table 3 shows the themes and sub-themes and lists the authors who discussed them.

4.1. Psychological Factors

Anxiety is a key psychological factor related to skills acquisition. Although anxiety is a natural phenomenon experienced by learners, its level can influence performance: students who experienced high levels of anxiety did not perform well, whereas students who experienced lower levels performed better [20]. This suggests that, although high anxiety can impair a student's performance, low levels of anxiety can actually improve the student's ability to perform. All studies except four [29, 30, 32, 34] reported a decrease in anxiety levels. Managing anxiety in simulation settings could help students develop better self-confidence, and this is supported by all the studies reviewed here except two [29, 34].

Nelwati et al. [15] found that self-confidence is important for undergraduate nurses because it enables more accurate and effective care delivery; a higher sense of self-confidence increased the accuracy of students' work and reduced the chance of mistakes. Brannagan et al. [33] further noted that research has consistently found peer instruction to increase a student's self-confidence, which links to Bandura's [55] work on self-efficacy, a person's belief in their ability to complete a given task. One study [31] suggested a possible connection between the use of standardised patient scenarios and reduced anxiety and increased self-confidence. A systematic review of high-fidelity simulation experiences [56] likewise noted a reduction in anxiety and an increase in self-confidence following the experience, which helped students cope with their nursing duties on real patients. Similarly, Curtis et al. [32] reported that students' self-confidence increased following exposure to a mid-fidelity simulation experience. Self-confidence also allowed students to become proficient before undertaking the same task on a real patient, which several authors noted was a concern for students. However, Kim and De Gagne [31] found no statistically significant differences between groups that received peer-tutor support and those that did not. Taken together, the fidelity of the experience, low levels of anxiety, and the use of standardised patients could increase a student's self-confidence to complete nursing tasks on real patients.

Both reduced anxiety and increased self-confidence are relevant to this review's aim of comparing peer-to-peer and instructor-led teaching for the acquisition of clinical skills. However, differences in satisfaction between peer-to-peer and instructor-led instruction appear to depend on how well peer-tutors were prepared for teaching and giving feedback, and inadequately prepared peer-tutors undermine what peer-to-peer learning is trying to achieve. Peer-to-peer teaching and learning offers a safe environment, widens a student's social circle and networking opportunities [35], and gives final-year students confidence in their own skills before registration [15]. This is consistent with Vygotsky's social constructivist theory of learning, which identifies three key elements: social interaction, the more knowledgeable other, and the zone of proximal development [57]. A peer-to-peer programme offers all three; however, a structured peer-tutor programme is necessary if students and lecturers are to gain the benefits of peer-to-peer teaching and learning [58]. Francis [58] also noted that a well-structured support programme helped develop psychomotor skills in both tutors and tutees and increased their self-confidence. Developing such a support programme should include discussing its construction and implementation with experts in the field.

4.2. Motor Skills

Three studies [29, 31, 33] found that students rated lecturer-led debriefing more highly than peer-led feedback following a simulation experience. Brannagan et al. [33] further reported no statistically significant difference between groups and no improvement in clinical skills beyond natural progression. Roh et al. [29] compared peer-led and instructor-led debriefing following cardiopulmonary resuscitation training and found that instructor-led debriefing achieved higher student satisfaction. Kim and De Gagne [31] also compared instructor-led and peer-led debriefing: instructor-led debriefings were rated significantly higher for quality, but the differences in knowledge acquisition and self-confidence, although present, were marginal and not statistically significant.

On feedback, Turnwall [34] reported one student's comment that feedback is the responsibility of the teacher rather than of a peer, showing that some students still consider the traditional method of feedback appropriate. None of the papers addressing this issue [29, 31] showed conclusively whether lecturer or peer feedback was more effective. Some students nevertheless felt they needed a lecturer or instructor present during peer-tutoring sessions, even if only for clarification and support [35]. Students rated instructor-led debriefing more highly [31], and instructor-led groups also showed significantly higher skills performance. However, many students found lecturers imposing [35] and felt 'at ease' and in a 'safe and trusting environment' with peer-tutors [34]. It therefore remains unclear whether faculty staff need to be present, and this is an area that universities need to explore.

4.3. Educational Issues

Three of the studies [29-31] noted a shortage of faculty staff and large student groups to teach. Peer-to-peer teaching may therefore reduce lecturers' workload, and it was described as a cost-effective method of instruction [16, 29], although another study found a lack of evidence for this [33]. Gains in confidence, psychomotor skills acquisition, cognition, critical thinking, and problem solving were also evident [15] in simulation settings, including both peer-led and lecturer-led sessions. The fidelity of the simulation experience (low, medium, or high) was also important. High-fidelity simulation has been researched in nurse education, where barriers such as cost and training requirements have left it undervalued and underused [59]. One study [32] of students' self-confidence in completing their nursing skills in a mid-fidelity experience found that students reported high self-confidence following the simulation.

Penson [60] argued that students need to understand the reasons for learning objectives and why they have been selected, and to be able to apply knowledge acquired in the classroom (simulation setting) to real-life situations (practice setting), thus bridging the theory-practice gap; this is a learning strategy that constructivism encourages [61]. Active engagement is also important: five of the authors identified actively engaging students in their education as a key factor in peer-led teaching and learning [15, 16, 30, 32, 35]. The evidence suggests that engaging students as active learners can improve critical thinking, an important element of peer teaching and learning. This is a positive outcome and an important trait for nurses. These skills were noted by all authors [29], and two of the studies in this review found that simulation encourages active engagement of students, even when it is peer-led [31, 35].

4.4. Synthesis and Evaluation

In summary, peer-to-peer teaching and learning has both positives and negatives; however, there was little evidence as to whether it is 'better' than lecturer-led clinical skills teaching in the simulated setting.

The evidence suggests that peer-to-peer teaching and learning can be a valuable alternative to faculty-led teaching, because it can create bonds between student peers, reduce anxiety, and increase a student's ability to learn through socialisation and peer support.

Although peer-to-peer teaching and learning should not completely replace faculty-led teaching, it can help reduce the faculty staffing needed to support simulation experiences, which some of the literature reviewed indicated was beneficial. Instructors or lecturers will still need to be present to confirm the consistency and accuracy of the skills being taught, while allowing students to lead their own education through a student-focused curriculum designed to prepare them for the peer-tutor role.

The studies included in this review were heterogeneous, which precludes direct comparison, and they fall into the observational category of the Grading of Recommendations, Assessment, Development and Evaluation (GRADE) framework [44]. However, they are appropriate for answering the questions they set out to address. Although these articles are categorised as 'Low' within GRADE [62], they could be seen as 'high' in relation to the questions they were researching. Two studies specifically acknowledged a lack of generalisability [31, 35]. Given the heterogeneity of the included articles, it is difficult to synthesise the kind of recommendations expected from a meta-analysis or systematic review. However, the findings can still be combined in a narrative synthesis, even though they would be rated 'Low' in quality under Purssell's [44] approach. Furthermore, because many authors reported reduced anxiety and increased self-confidence, it would be remiss to disregard these articles on the basis of Purssell's [44] 'Low' quality rating, especially when their findings could positively influence students' learning and the design of learning packages.

GRADE is not used to appraise individual articles; rather, it grades the strength and quality of the body of evidence in systematic reviews, which can be upgraded or downgraded [47]. GRADE is an internationally recognised tool, but it can be problematic if the question or intervention is poorly constructed; for example, vague descriptions that lack specific terms are difficult to grade [47]. The ratings 'high', 'moderate', 'low', and 'very low' should be used by the author(s) to appraise the quality of the body of evidence selected by the raters [63, 64]. Purssell [44] recommends that RCTs be rated 'high' and observational studies 'low', irrespective of the quality of the evidence. This review did not find any RCTs; because the studies used quasi-experimental designs, the evidence collected is rated as low in quality [47]. Observational studies, surveys, cohort studies, and systematic/integrative reviews are all classified as low quality, meaning that further research is likely to have an important impact on our confidence in the estimate of effect and is likely to change the estimate. Systematic reviews should be considered more rigorous; however, those included here lacked comparable outcomes, which made meta-analysis impossible.

4.5. Limitations of the Review

This review was conducted using appropriately systematic and rigorous processes, with a thorough and exhaustive literature search including grey literature, blinded quality appraisal by three reviewers, and comprehensive critical appraisal of the search results. Three limitations are apparent. First, only papers written in English could be included because translation services were not available. This is a potential source of bias, although we argue that it is not significant given that most international academic publishing takes place in English. Second, because the results were heterogeneous, we were not able to test for publication bias in the literature or construct funnel plots to do so. This is unavoidable given the embryonic nature of the evidence base assessed. Third, while we have shown that peer-to-peer teaching and learning of clinical skills has benefits, it also has negative factors. This limits our ability to answer the initial review question, which concerned effectiveness; however, this too is unavoidable.

4.6. Recommendations

This review is therefore a starting point for further research into student nurses' acquisition of clinical skills in the simulated setting, mediated by peers or lecturers as educators. Standardisation of study designs and outcome measures is required to facilitate further systematic reviews and meta-analyses that analyse and synthesise the evidence base as it evolves.

From the evidence appraised, it could be concluded that all universities could use peer-tutors in simulation settings to reduce anxiety and increase students' self-confidence, aiming for the highest possible fidelity of simulation experience to support both outcomes.

One recommendation, therefore, is that a standardised curriculum for peer learning for skills acquisition be developed. This would allow students to learn the fundamentals of being a peer-tutor before stepping into that role. It may also alleviate some of the issues raised by Li et al. [63], who noted that some peer-tutors did not feel well prepared for the role. This was also highlighted by Nelwati et al. [17], who had tutees ask peer-tutors questions during their training so that the peer-tutors gained experience of answering questions, something they had been concerned about, before taking on the role.

CONCLUSION

This review followed a systematic process to find all available evidence on the subject and found a heterogeneous evidence base. Although it found that peer-led teaching can reduce anxiety, increase self-confidence and critical thinking, and possibly reduce the faculty staffing needed, there is still a lack of high-quality research showing whether lecturer-led or peer-led clinical skills teaching improves skills acquisition. There is no definitive answer to the review question; rather, the evidence suggests possible benefits and indicates the themes addressed by scholars: psychological factors, motor skills, and educational issues. We argue that, with the introduction of low- or moderate-fidelity simulation, students could potentially achieve better skill acquisition during a simulation experience. Nevertheless, a member of staff, faculty, or a clinical demonstrator is still needed to ensure parity among the peer-tutors and the skills they demonstrate.

LIST OF ABBREVIATIONS

| Abbreviation | Meaning |
|---|---|
| AMED | Allied and Complementary Medicine Database |
| CINAHL | Cumulative Index to Nursing and Allied Health Literature |
| HEI | Higher Education Institution |
| JBI | Joanna Briggs Institute |
| NMC | Nursing and Midwifery Council |
| PICO | Population, Intervention, Comparator, Outcome |
| RCT | Randomised Controlled Trial |

CONSENT FOR PUBLICATION

Not applicable.

AVAILABILITY OF DATA AND MATERIALS

The data supporting the findings of the article are available from the corresponding author [G.W.] on reasonable request.

FUNDING

This project was a Master’s dissertation and was funded from the University of Plymouth School of Nursing and Midwifery staff development funds.

CONFLICT OF INTEREST

Dr. Williamson is the Editor-in-Chief of The Open Nursing Journal.

ACKNOWLEDGEMENTS

Declared none.